Our paper “Self-supervised visual learning in the low-data regime: A comparative evaluation”, authored by S. Konstantakos, J. Cani, I. Mademlis, D. I. Chalkiadaki, Y. M. Asano, E. Gavves and G. Th. Papadopoulos, was recently accepted for publication in Elsevier Neurocomputing.

Link: https://www.sciencedirect.com/science/article/pii/S0925231224019702?via%3Dihub

This paper is a collaboration between the Department of Informatics and Telematics of Harokopio University and the University of Amsterdam.

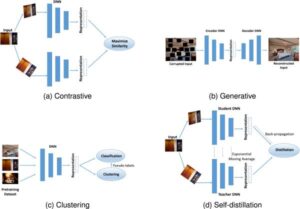

Self-Supervised Learning (SSL) is a valuable and robust training methodology for contemporary Deep Neural Networks (DNNs), enabling unsupervised pretraining on a ‘pretext task’ that does not require ground-truth labels or annotations. However, although SSL is relatively straightforward to conceptualize and apply, it is not always feasible to collect and/or utilize very large pretraining datasets, especially in real-world application settings.

This situation motivates an investigation into the effectiveness of common SSL pretext tasks when the pretraining dataset is of relatively limited size. In particular, this work briefly introduces the main families of modern visual SSL methods and subsequently conducts a thorough comparative experimental evaluation in the low-data regime, aiming to identify:

- What is learnt via low-data SSL pretraining

- How different SSL categories behave in such training scenarios

Interestingly, for domain-specific downstream tasks, in-domain low-data SSL pretraining is shown to outperform the common approach of large-scale pretraining on general datasets.