Below is our recent work, entitled “Visual Hand Gesture Recognition with Deep Learning: A Comprehensive Review of Methods, Datasets, Challenges and Future Research Directions”, authored by K. Foteinos, J. Cani, M. Linardakis, P. Radoglou-Grammatikis, V. Argyriou, P. Sarigiannidis, I. Varlamis and G. Th. Papadopoulos.

Link: https://arxiv.org/pdf/2507.04465

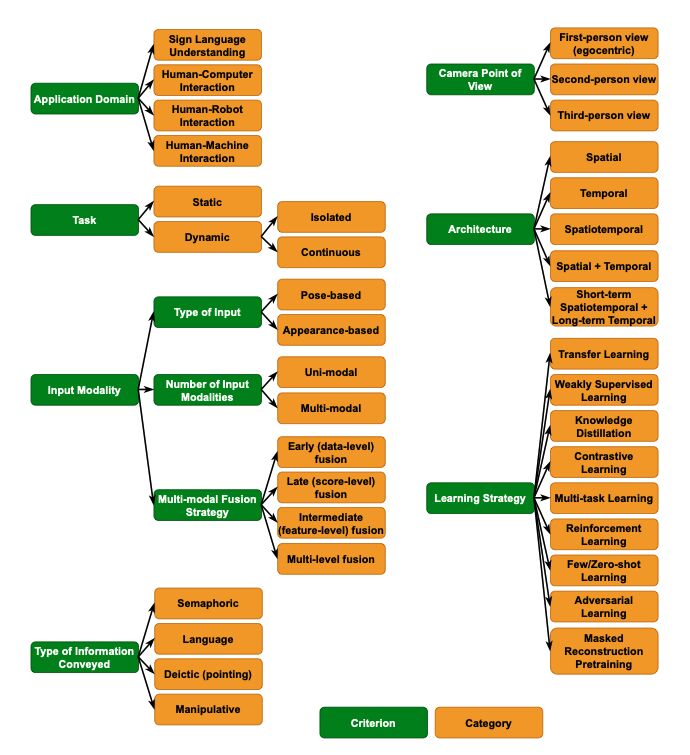

This study provides a comprehensive overview of the field of Visual Hand Gesture Recognition (VHGR) using deep learning methodologies, with emphasis on the following aspects:

- Identification and organization of key VHGR approaches in a taxonomy along several dimensions, such as input modality and application domain

- In-depth analysis of state-of-the-art techniques across three primary VHGR tasks: static gesture recognition, isolated dynamic gesture recognition and continuous gesture recognition

- Detailed account of the architectural trends and learning strategies for each task

- Review of commonly used datasets (with emphasis on annotation schemes)

- Evaluation of standard performance metrics

- Identification of major challenges in VHGR, including both general computer vision issues and domain-specific obstacles

- Outline of promising directions for future research